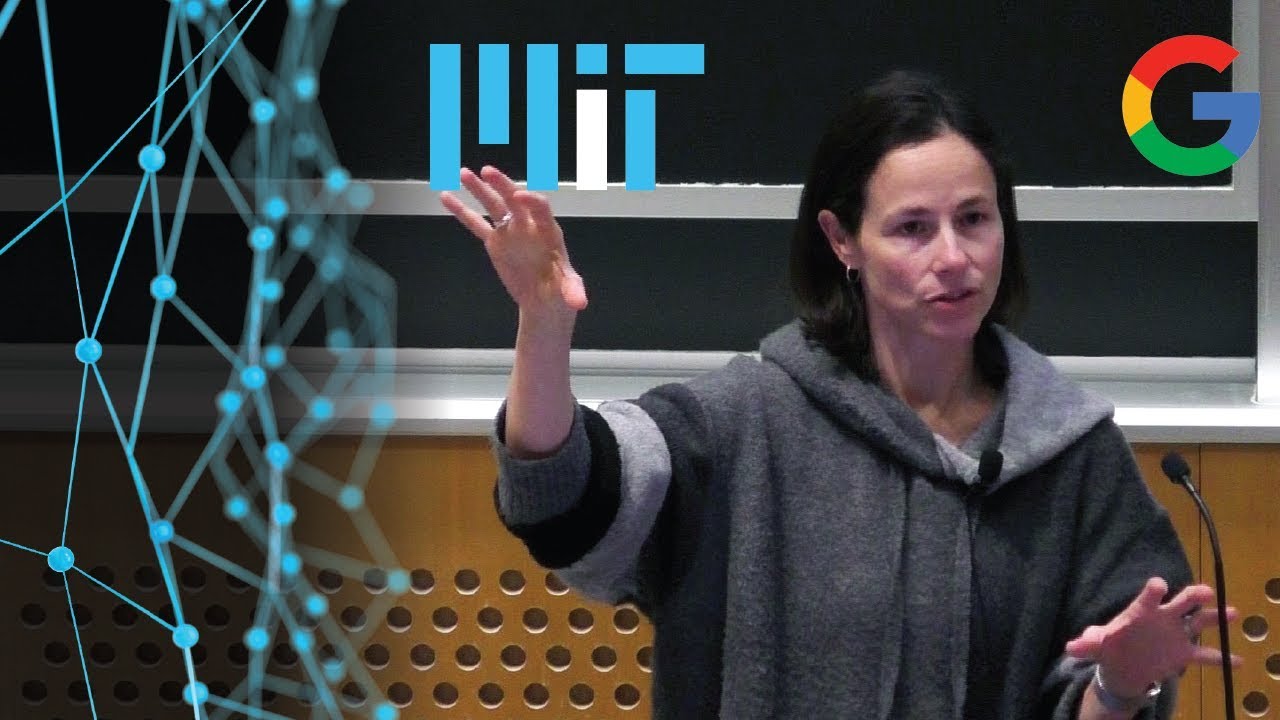

MIT Introduction to Deep Learning 6.S191: Lecture 7

Data Visualization for Machine Learning

Lecturer: Fernanda Viegas

Google Brain Guest Lecture

January 2019

For all lectures, slides and lab materials: .

MIT 6.S191 (2019): Visualization for Machine Learning (Google Brain)

#MIT #6S191 #Visualization #Machine #Learning #Google #Brain

Because of these, there won't be a single misfortune left that hasn't befallen us 🙂

This lecture is a gem, and I'm left scratching my head as to why it has just a thousand likes, and seventeen dislikes at that. Really? About the word vector visualizations: does it even make sense to try to remove biases from word vectors without curating every single bit of the corpus? Is there some way out of this?

Whoa

This is a very inspiring video for me. In 2018 I was trying to develop an abstract model for emotion recognition from text; I called it 'semantic melodies', in connection with text tonalities. It was very poor, but at the same time I found that some words, like spirit/inspiration/respiration, are connected in the same way in at least English and Russian. The points shown in this video could help to develop those ideas further! I've seen a few videos on word embeddings already, but this one gives a much better taste. Thank you so much, I'm very grateful this channel exists. Please keep your work going; I will be watching every new video!

This is such important work!!

With visualizations like these we can begin to understand Neural Networks!!

She knows how to talk and present.

Mind blowing.

Easy to fool.

Is it a graduate or undergraduate course?

Great topic! Great talk!

Wow

A concept beyond imagination! Moving towards natural language…

Good presentation, and informative on a deep level. You always explain things in an impressive way. Could you please provide your email ID?

Sometimes there is no one-to-one mapping between two different languages, e.g., language A has no word corresponding to a word in language B that needs to be translated. How will the computer deal with such a case?

A mistake in CIFAR-10, impressive!!!

Watching this after "Connections between physics and deep learning" (2016) by Max Tegmark is interesting. He focuses a lot on properties like locality that exist both in the natural world we live in and in neural networks. It is a real "aha!" moment to see the languages behaving in such a local way in the embedding projector. I think that's very neat.

The multi-lingual embedding space is blowing my mind

thnx!

awesome talk! amazing tools!

<3

Great!