Let’s discuss the question: how to know if eigenvectors are linearly independent. We summarize the relevant answers in the Q&A section of Achievetampabay.org, in the category Blog Finance.

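How do you prove that eigenvectors are linearly independent?

- Let v1, …, vn be eigenvectors of A corresponding to distinct eigenvalues λ1, …, λn, let Sk = {v1, …, vk}, and let P(k) be the statement “Sk is linearly independent”. We have to prove P(k) for all 0≤k≤n, by induction on k.

- An empty set is linearly independent by definition. Therefore, P(0) holds. Since eigenvectors are non-zero, S1 is linearly independent. Therefore, P(1) holds.

- Assume P(k) holds for some 1≤k<n, that is, Sk is linearly independent. Applying A − λ_{k+1}I to any linear relation among v1, …, v_{k+1} removes v_{k+1} and leaves a relation among v1, …, vk, whose coefficients must vanish by P(k). Because the eigenvalues are distinct, the original coefficients are zero as well, so P(k+1) holds.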
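The inductive step can be written out as a short derivation. This is a sketch of the standard argument, assuming v_1, …, v_{k+1} are eigenvectors of A for pairwise distinct eigenvalues λ_1, …, λ_{k+1} and that c_1, …, c_{k+1} are the scalars of an arbitrary linear relation:

```latex
\begin{aligned}
&c_1 v_1 + \dots + c_k v_k + c_{k+1} v_{k+1} = 0 \\
&\text{apply } (A - \lambda_{k+1} I):\quad
  c_1(\lambda_1 - \lambda_{k+1})\, v_1 + \dots + c_k(\lambda_k - \lambda_{k+1})\, v_k = 0 \\
&P(k) \;\Rightarrow\; c_i(\lambda_i - \lambda_{k+1}) = 0
  \;\Rightarrow\; c_i = 0 \quad (1 \le i \le k),\ \text{since } \lambda_i \ne \lambda_{k+1} \\
&\Rightarrow\; c_{k+1} v_{k+1} = 0 \;\Rightarrow\; c_{k+1} = 0,\ \text{since } v_{k+1} \ne 0
\end{aligned}
```

All coefficients vanish, so the k+1 eigenvectors are linearly independent.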

How many eigenvectors are linearly independent?

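It depends on the eigenvalue. When the eigenspace of an eigenvalue is one-dimensional, there are infinitely many eigenvectors for that eigenvalue, but they are all scalar multiples of one another, so only one linearly independent eigenvector is possible. In general, the number of linearly independent eigenvectors for an eigenvalue equals the dimension of its eigenspace. Note: Corresponding to n distinct eigenvalues, we get n linearly independent eigenvectors.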
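One way to count the independent eigenvectors for a given eigenvalue is to compute the dimension of its eigenspace as n − rank(A − λI). The sketch below assumes NumPy and real matrices; the function name `geometric_multiplicity`, the tolerance, and the example matrices are illustrative choices, not part of the original answer.

```python
import numpy as np

def geometric_multiplicity(A, lam, tol=1e-9):
    """Number of linearly independent eigenvectors of A for eigenvalue lam.

    Computed as n - rank(A - lam*I), the dimension of the eigenspace.
    """
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    return int(n - np.linalg.matrix_rank(A - lam * np.eye(n), tol=tol))

# Hypothetical examples (not from the article):
shear = [[2, 1], [0, 2]]      # eigenvalue 2 repeated, one-dimensional eigenspace
scaled = [[2, 0], [0, 2]]     # eigenvalue 2 repeated, two-dimensional eigenspace
distinct = [[1, 0], [0, 3]]   # two distinct eigenvalues, 1 and 3

print(geometric_multiplicity(shear, 2))
print(geometric_multiplicity(scaled, 2))
print(geometric_multiplicity(distinct, 1) + geometric_multiplicity(distinct, 3))
```

The first matrix has a single independent eigenvector even though its eigenvalue is repeated, while the last one illustrates the note about distinct eigenvalues.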

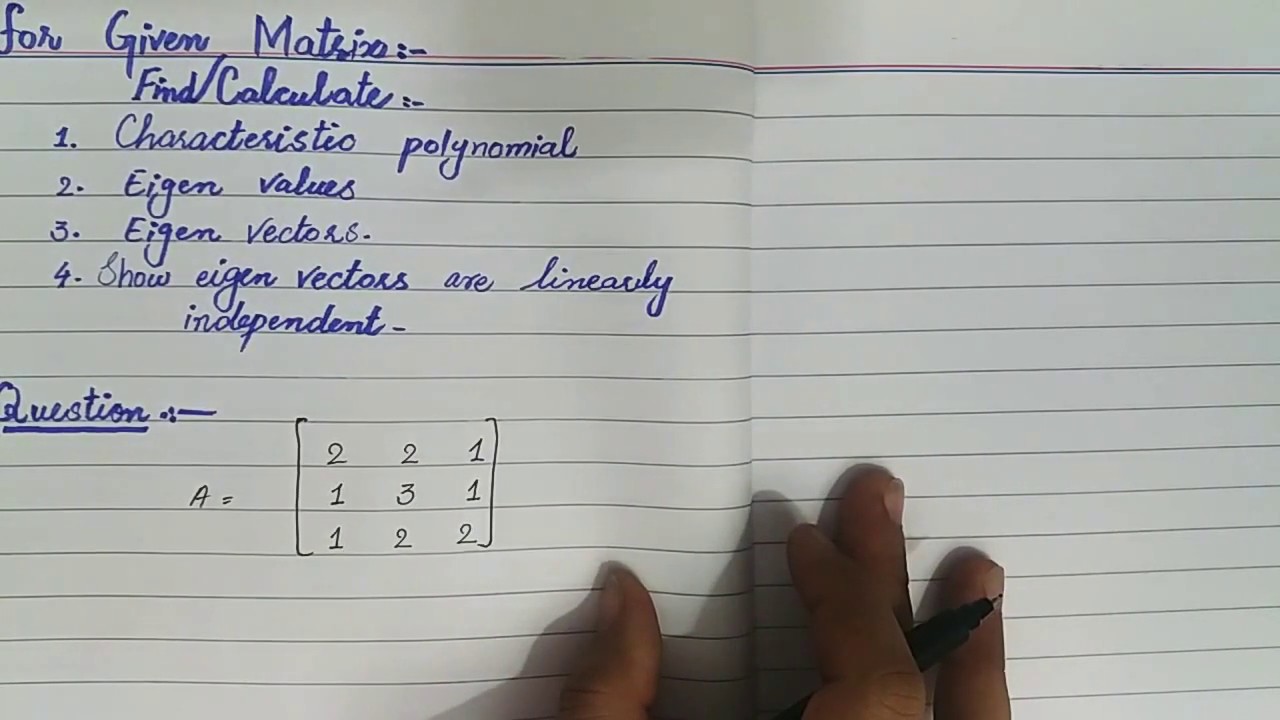

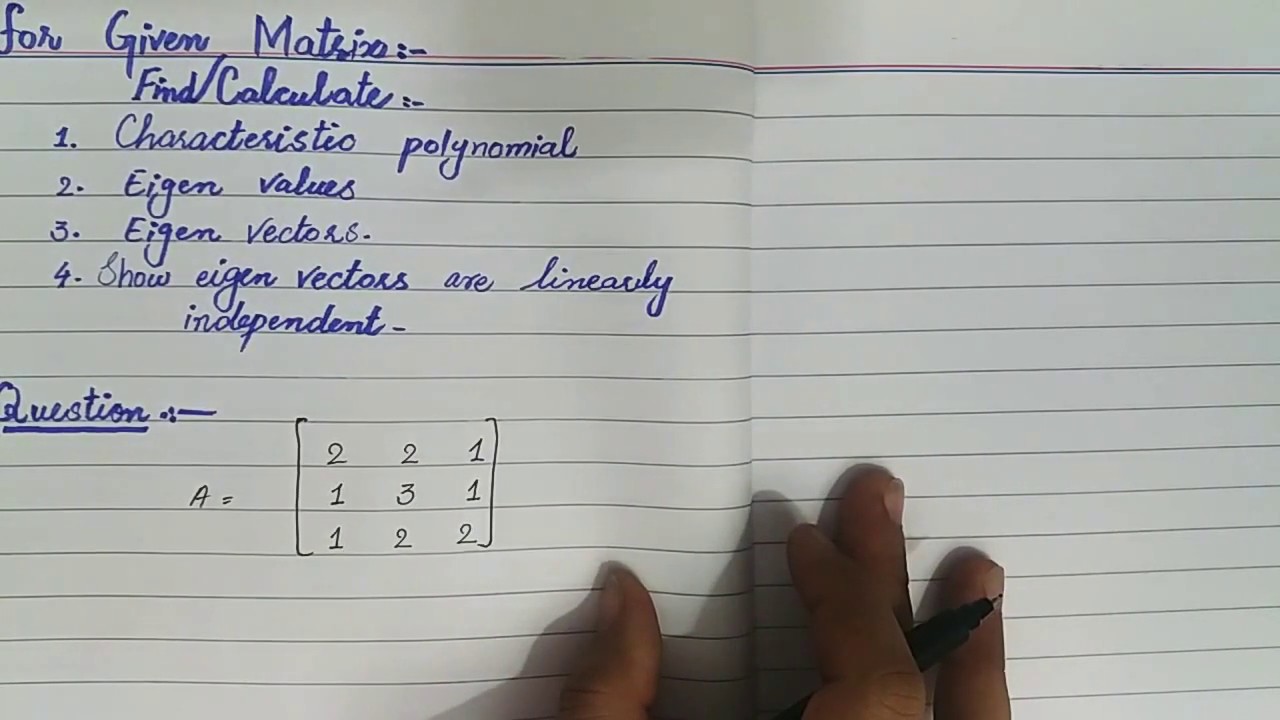

How to find Eigenvalues, Eigenvectors and show vectors are linearly independent

Can eigenvectors be linearly dependent?

Yes. For example, an eigenvector times a nonzero scalar value is also an eigenvector. Now you have two, and they are of course linearly dependent. Similarly, the sum of two eigenvectors for the same eigenvalue is also an eigenvector for that eigenvalue, as long as the sum is nonzero. The eigenvectors for an eigenvalue, together with the zero vector, form a subspace of the vector space.

How do you find linearly independent vectors?

To test whether vectors a, b, c are linearly independent, find the coefficients x1, x2, x3 for which the linear combination x1a + x2b + x3c equals the zero vector. If this system has only the solution x1 = 0, x2 = 0, x3 = 0, the vectors a, b, c are linearly independent; if it also has a nonzero solution, they are linearly dependent.
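The same test can be sketched numerically: stack the vectors as columns and compare the rank with the number of vectors. This assumes NumPy; the function name `are_linearly_independent`, the tolerance, and the sample vectors are hypothetical, since the original answer does not list its vectors.

```python
import numpy as np

def are_linearly_independent(vectors, tol=1e-9):
    """True if x1*v1 + ... + xk*vk = 0 has only the trivial solution.

    Stacks the vectors as columns; the homogeneous system has a unique
    (zero) solution exactly when the rank equals the number of vectors.
    """
    M = np.column_stack([np.asarray(v, dtype=float) for v in vectors])
    return bool(np.linalg.matrix_rank(M, tol=tol) == len(vectors))

# Hypothetical vectors a, b, c (the article does not list its own):
a, b, c = [1, 0, 0], [1, 1, 0], [1, 1, 1]
print(are_linearly_independent([a, b, c]))          # independent set
print(are_linearly_independent([a, b, [2, 1, 0]]))  # [2, 1, 0] = a + b, so dependent
```

The rank test also works when the number of vectors differs from their dimension, which a determinant cannot handle.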

How do you prove something is an eigenvector?

- If someone hands you a matrix A and a vector v, it is easy to check if v is an eigenvector of A: simply multiply v by A and see if Av is a scalar multiple of v. …

- To say that Av = λv means that Av and v are collinear with the origin.
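The check described above can be sketched in a few lines, assuming NumPy and a real matrix. The function name `check_eigenvector` and the example matrix are illustrative assumptions: the code picks the scalar λ that best fits Av ≈ λv and then tests whether the fit is exact up to a tolerance.

```python
import numpy as np

def check_eigenvector(A, v, tol=1e-9):
    """Return (is_eigenvector, lam) for a square real matrix A and a vector v."""
    A = np.asarray(A, dtype=float)
    v = np.asarray(v, dtype=float)
    if np.allclose(v, 0.0):
        return False, None            # the zero vector is never an eigenvector
    Av = A @ v
    lam = (v @ Av) / (v @ v)          # best scalar so that lam * v approximates Av
    is_eig = np.linalg.norm(Av - lam * v) <= tol * max(1.0, np.linalg.norm(Av))
    return bool(is_eig), (float(lam) if is_eig else None)

A = [[2, 1], [1, 2]]                  # hypothetical example matrix
print(check_eigenvector(A, [1, 1]))   # A @ [1, 1] = [3, 3] = 3 * [1, 1]
print(check_eigenvector(A, [1, 0]))   # A @ [1, 0] = [2, 1], not a multiple of [1, 0]
```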

How do you find the eigenvectors of a 2×2 matrix?

- Set up the characteristic equation, using |A − λI| = 0.

- Solve the characteristic equation, giving us the eigenvalues (2 eigenvalues for a 2×2 system)

- Substitute each eigenvalue into the two equations given by (A − λI)v = 0 and solve them for the eigenvector v.
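These steps can be sketched for a 2×2 matrix [[a, b], [c, d]] in plain Python. The function name `eig_2x2` is hypothetical, the sketch assumes real eigenvalues, and it relies on exact comparisons with zero, which is fine for small hand-sized examples but not for noisy floating-point input.

```python
import math

def eig_2x2(a, b, c, d):
    """Eigenpairs of [[a, b], [c, d]], assuming real eigenvalues.

    |A - lam*I| = lam^2 - (a + d)*lam + (a*d - b*c) = 0.
    """
    trace, det = a + d, a * d - b * c
    disc = trace * trace - 4 * det
    if disc < 0:
        raise ValueError("complex eigenvalues are not handled in this sketch")
    root = math.sqrt(disc)
    pairs = []
    for lam in ((trace + root) / 2, (trace - root) / 2):
        # Solve (A - lam*I) v = 0 using whichever row is nonzero.
        if b != 0 or a - lam != 0:
            v = (b, lam - a)      # satisfies (a - lam)*x + b*y = 0
        elif c != 0 or d - lam != 0:
            v = (lam - d, c)      # satisfies c*x + (d - lam)*y = 0
        else:
            v = (1.0, 0.0)        # A - lam*I is zero: every nonzero vector works
        pairs.append((lam, v))
    return pairs

for lam, v in eig_2x2(2, 1, 1, 2):   # hypothetical matrix [[2, 1], [1, 2]]
    print(lam, v)
```

Each returned vector is one representative; any nonzero multiple of it is an eigenvector for the same eigenvalue.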

Are eigenvectors of the same eigenvalues linearly dependent?

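Not always. Of the three numbered statements that follow, only (7) is true. (5) “Two distinct eigenvectors corresponding to the same eigenvalue are always linearly dependent” is false: an eigenspace of dimension two or more contains linearly independent eigenvectors. (6) “If λ is an eigenvalue of a linear operator T, then each vector in Eλ is an eigenvector of T” is false, because Eλ contains the zero vector, which is not an eigenvector. (7) “If λ1 and λ2 are distinct eigenvalues of a linear operator T, then Eλ1 ∩ Eλ2 = {0}” is true.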

Do eigenvectors always form a basis?

Not always. Any basis of an n-dimensional space must consist of n linearly independent vectors, and it is not always the case that an n×n matrix has n linearly independent eigenvectors. When it does, the matrix is diagonalizable and those eigenvectors form a basis.
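A small worked example, chosen here for illustration and not taken from the original answer, shows how a matrix can fall short of n independent eigenvectors:

```latex
A = \begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix}, \qquad
\det(A - \lambda I) = (1 - \lambda)^2 = 0 \;\Rightarrow\; \lambda = 1,
\qquad
(A - I)\,v = \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}
\begin{pmatrix} x \\ y \end{pmatrix} = 0 \;\Rightarrow\; y = 0,\quad
v = x \begin{pmatrix} 1 \\ 0 \end{pmatrix}
```

Every eigenvector is a multiple of (1, 0), so this 2×2 matrix has only one linearly independent eigenvector, and its eigenvectors cannot form a basis of R2.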

Can an eigenvalue have two linearly independent eigenvectors?

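Yes. It’s possible to have two or more independent eigenvectors with the same eigenvalue, in which case the eigenvectors for that eigenvalue, together with the zero vector, form a subspace of dimension two or more. For example, every nonzero vector is an eigenvector of the identity matrix, with eigenvalue 1.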

Can one eigenvalue have multiple independent eigenvectors?

Yes. There’s nothing in the definition that stops us having multiple eigenvectors with the same eigenvalue. The converse statement, that an eigenvector can have more than one eigenvalue, is not true, which you can see from the definition of an eigenvector: if Av = λv and Av = μv with v nonzero, then (λ − μ)v = 0, so λ = μ.

How to find out if a set of vectors are linearly independent? An example.

Are orthogonal vectors linearly independent?

Nonzero, mutually orthogonal vectors are linearly independent. A set of n nonzero orthogonal vectors in Rn automatically forms a basis.
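The reason can be sketched in one line, assuming v_1, …, v_n are nonzero and pairwise orthogonal (so v_j · v_i = 0 whenever i ≠ j) and taking the dot product of a linear relation with each v_j in turn:

```latex
c_1 v_1 + \dots + c_n v_n = 0
\;\Rightarrow\;
0 = v_j \cdot (c_1 v_1 + \dots + c_n v_n) = c_j \,(v_j \cdot v_j) = c_j \lVert v_j \rVert^2
\;\Rightarrow\;
c_j = 0 \quad \text{for each } j, \text{ since } v_j \neq 0
```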

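How do you know if vectors are linearly dependent?

Write the vectors as the rows (or columns) of a square matrix. If the determinant of the matrix is zero, then the vectors are linearly dependent. For three vectors in R3, it also means that the rank of the matrix is less than 3. In the example this answer was taken from, the determinant depends on a parameter s and vanishes for s = 1 and s = 11, so the set of vectors is linearly dependent for those values.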
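The determinant test can be sketched as follows, assuming NumPy. The function name `dependent_by_determinant`, the tolerance, and the parameterized family are hypothetical; the vectors of the original example are not given, so a different family with determinant s² − 1 is used instead.

```python
import numpy as np

def dependent_by_determinant(vectors, tol=1e-9):
    """True if n vectors in R^n are linearly dependent (determinant is ~0)."""
    M = np.array(vectors, dtype=float)
    if M.ndim != 2 or M.shape[0] != M.shape[1]:
        raise ValueError("the determinant test needs n vectors of length n")
    return bool(abs(np.linalg.det(M)) <= tol)

def family(s):
    """Hypothetical vectors depending on a parameter s; determinant = s**2 - 1."""
    return [[1, 0, 0], [0, s, 1], [0, 1, s]]

for s in (-1, 1, 2):
    print(s, dependent_by_determinant(family(s)))
```

A fixed tolerance on the determinant is sensitive to the scale of the entries, so for badly scaled data a rank computation is usually the safer check.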

What does it mean when vectors are linearly independent?

A set of vectors is linearly independent if no vector in it can be expressed as a linear combination of the others (i.e., none is in the span of the other vectors). Equivalently, for an ordered list of vectors, the first vector is nonzero and no vector can be expressed as a linear combination of those listed before it.

What are dependent and independent vectors?

In the theory of vector spaces, a set of vectors is said to be linearly dependent if there is a nontrivial linear combination of the vectors that equals the zero vector. If no such linear combination exists, then the vectors are said to be linearly independent.

How do you find the eigenvalue of an eigenvector?

In order to determine the eigenvectors of a matrix, you must first determine the eigenvalues. Substitute one eigenvalue λ into the equation Ax = λx (or, equivalently, into (A − λI)x = 0) and solve for x; the resulting nonzero solutions form the set of eigenvectors of A corresponding to the selected eigenvalue. Going the other way, if you already have an eigenvector v, compute Av; the eigenvalue is the scalar λ for which Av = λv.
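Solving (A − λI)x = 0 numerically amounts to finding a null space. The sketch below does this with a singular value decomposition, assuming NumPy and a real matrix; the function name `eigenvectors_for`, the tolerance, and the example matrix are illustrative assumptions.

```python
import numpy as np

def eigenvectors_for(A, lam, tol=1e-9):
    """Orthonormal basis (as columns) of the solutions of (A - lam*I) x = 0."""
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    _, s, vt = np.linalg.svd(A - lam * np.eye(n))
    return vt[s <= tol].T    # right singular vectors with (near-)zero singular value

A = np.array([[4.0, 1.0],
              [2.0, 3.0]])   # hypothetical matrix with eigenvalues 2 and 5

V = eigenvectors_for(A, 2.0)
print(V.shape[1])                    # number of independent eigenvectors found
print(np.allclose(A @ V, 2.0 * V))   # each column satisfies A x = 2 x
print(eigenvectors_for(A, 3.0).shape[1])   # 3 is not an eigenvalue: no columns
```

The number of columns returned is the number of linearly independent eigenvectors for that eigenvalue.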

Are eigenvectors nonzero?

Yes, by definition. The equation Av = λv always has the solution v = 0, whatever the value of λ, so the zero vector tells us nothing and is excluded. If we let zero be an eigenvector, we would have to repeatedly say “assume v is a nonzero eigenvector such that…” since we aren’t interested in the zero vector. As a result, an eigenvalue always has an eigenspace of dimension at least one.

How do you find the norm of a vector?

The Euclidean norm (length) of a vector is simply the square root of the sum of the squares of its components.
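Written as a formula for a vector v = (v_1, …, v_n), with a small illustrative example that is not from the original answer:

```latex
\lVert v \rVert = \sqrt{v_1^2 + v_2^2 + \dots + v_n^2},
\qquad
\lVert (3, 4) \rVert = \sqrt{3^2 + 4^2} = \sqrt{25} = 5
```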

How do you find the eigenvectors of a 3×3 matrix?

- Step 1: In an online eigenvalue calculator, enter the 2×2 or 3×3 matrix elements in the respective input field.

- Step 2: Now click the button “Calculate Eigenvalues” or “Calculate Eigenvectors” to get the result.

- Step 3: Finally, the eigenvalues or eigenvectors of the matrix will be displayed in the new window.
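The calculator steps above have a programmatic counterpart. A minimal sketch, assuming NumPy is available; the 3×3 matrix is a hypothetical example, and `numpy.linalg.eig` returns the eigenvalues together with a matrix whose columns are the corresponding eigenvectors.

```python
import numpy as np

# Hypothetical 3x3 matrix (not from the article); it is symmetric, so its
# eigenvalues are real.
A = np.array([[2.0, 0.0, 0.0],
              [0.0, 3.0, 4.0],
              [0.0, 4.0, 9.0]])

eigenvalues, eigenvectors = np.linalg.eig(A)   # column i pairs with eigenvalues[i]

for i, lam in enumerate(eigenvalues):
    v = eigenvectors[:, i]
    print(round(float(lam), 6), np.allclose(A @ v, lam * v))

# The eigenvectors are linearly independent exactly when this rank equals 3.
print(np.linalg.matrix_rank(eigenvectors))
```

The order in which the eigenvalues come back is not guaranteed, so sort them if a fixed order matters.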

Eigenvectors and linear independence

How do you find the eigenvalues of a matrix?

- Step 1: Make sure the given matrix A is a square matrix. …

- Step 2: Form the matrix A − λI, where I is the identity matrix of the same order as A and λ is a scalar.

- Step 3: Find the determinant of the matrix A − λI and set it equal to zero.

- Step 4: From the equation thus obtained, calculate all the possible values of λ; these are the eigenvalues of A.
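The characteristic-equation route can be sketched with NumPy, assuming a small hypothetical matrix. Here `numpy.poly` returns the coefficients of det(λI − A), which has the same roots as det(A − λI), and `numpy.roots` solves the resulting equation.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])        # hypothetical square matrix

# Characteristic polynomial det(lam*I - A), highest power first.
# It has the same roots as det(A - lam*I).
coeffs = np.poly(A)               # lam^2 - 4*lam + 3

# The roots of the characteristic equation are the eigenvalues.
eigenvalues = np.roots(coeffs)
print(coeffs, eigenvalues)
```

For larger matrices, polynomial root-finding tends to be numerically fragile, so calling `numpy.linalg.eigvals` directly is usually the more robust choice.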

How do you find eigenvalues and eigenvectors examples?

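Example: Find the eigenvalues and associated eigenvectors of the matrix

$$A = \begin{pmatrix} 7 & 0 & -3 \\ -9 & -2 & 3 \\ 18 & 0 & -8 \end{pmatrix}.$$

Expanding the determinant along the second column gives

$$\det(A - \lambda I) = -(2 + \lambda)\,[(7 - \lambda)(-8 - \lambda) + 54] = -(\lambda + 2)(\lambda^2 + \lambda - 2) = -(\lambda + 2)^2(\lambda - 1).$$

Thus A has two distinct eigenvalues, λ1 = −2 and λ3 = 1. (Note that we might say λ2 = −2, since, as a root, −2 has multiplicity two.)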
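The example stops before listing eigenvectors. The sketch below, assuming NumPy, checks a set of candidate eigenvectors worked out by hand from (A − λI)v = 0 (they are an addition here, not part of the original answer) and then tests whether they are linearly independent.

```python
import numpy as np

A = np.array([[ 7,  0, -3],
              [-9, -2,  3],
              [18,  0, -8]])

# Eigenvectors worked out by hand from (A - lam*I) v = 0; they are not
# listed in the original example.
candidates = [(-2, np.array([1, 0, 3])),
              (-2, np.array([0, 1, 0])),
              ( 1, np.array([1, -1, 2]))]

for lam, v in candidates:
    assert np.array_equal(A @ v, lam * v)     # exact in integer arithmetic

V = np.column_stack([v for _, v in candidates])
print(np.linalg.matrix_rank(V))               # 3 means the eigenvectors are independent
```

Although −2 is a repeated eigenvalue, it contributes two independent eigenvectors here, so together with the eigenvector for 1 this matrix has three linearly independent eigenvectors.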

